AI Coworkers

We argue for a more interactive approach in which AI systems function more like coworkers. For them to be effective in this role, the...

Training Models that have Zero Likelihood

In Optimization, Dec 21, 2019

UCL AI Centre

In University College London, Jan 18, 2019

Generative Neural Machine Translation

In Natural Language Processing, Sep 12, 2018

UCL AI Centre

The AI Centre carries out foundational research in AI. As we transition to a more automated society, the core aim of the Centre is to...

All Stories

AI Coworkers

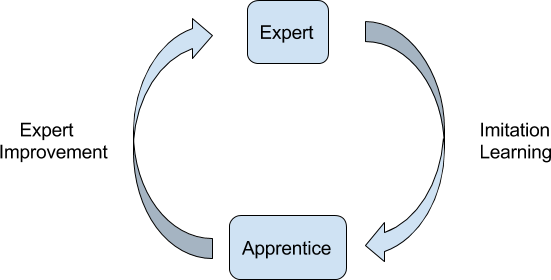

We argue for a more interactive approach in which AI systems function more like coworkers. For them to be effective in this role, they need to have reasonable estimates of confidence ...

In General, Jun 01, 2020

Training Models that have Zero Likelihood

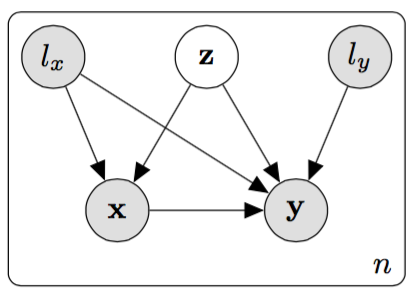

How can we train generative models when we cannot calculate the gradient of the likelihood?

In Optimization, Dec 21, 2019

UCL AI Centre

The AI Centre carries out foundational research in AI. As we transition to a more automated society, the core aim of the Centre is to create new AI technologies and advise on the use ...

In University College London, Jan 18, 2019

Generative Neural Machine Translation

Machine Learning progress is impressive, but we argue that progress is still largely superficial.

In Natural Language Processing, Sep 12, 2018

Learning From Scratch by Thinking Fast and Slow with Deep Learning and Tree Search

According to dual process theory, human reasoning consists of two different kinds of thinking. System 1 is a fast, unconscious and automatic mode of thought, also known as intuition. Sy...

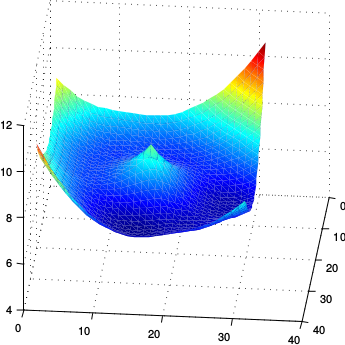

In Reinforcement Learning, Nov 07, 2017

Some modest insights into the error surface of Neural Nets

Did you know that feedforward Neural Nets (with piecewise linear transfer functions) have no smooth local maxima?

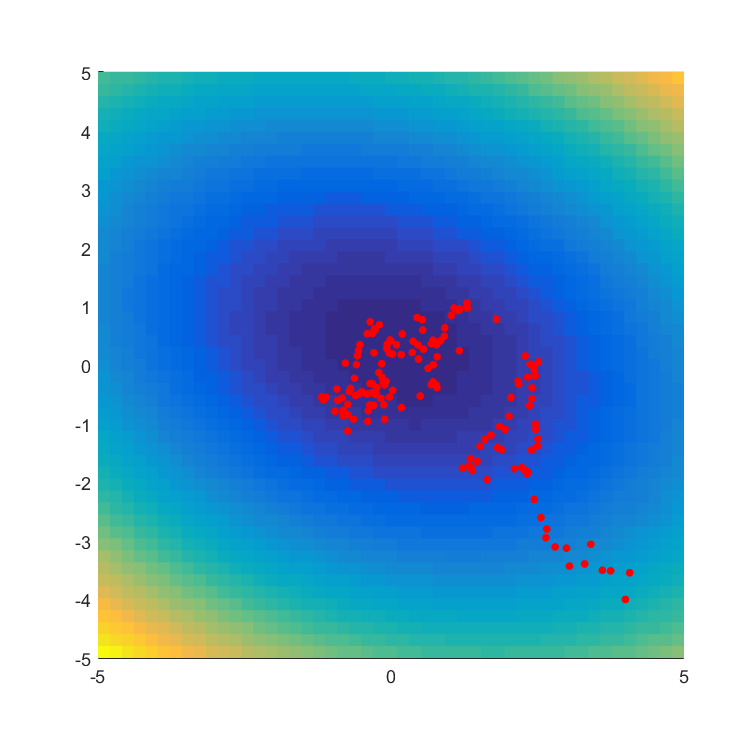

In Optimization, Jul 30, 2017

Evolutionary Optimization as a Variational Method

How popular evolutionary style optimisation methods can be viewed as optimisation of a rigorous bound.

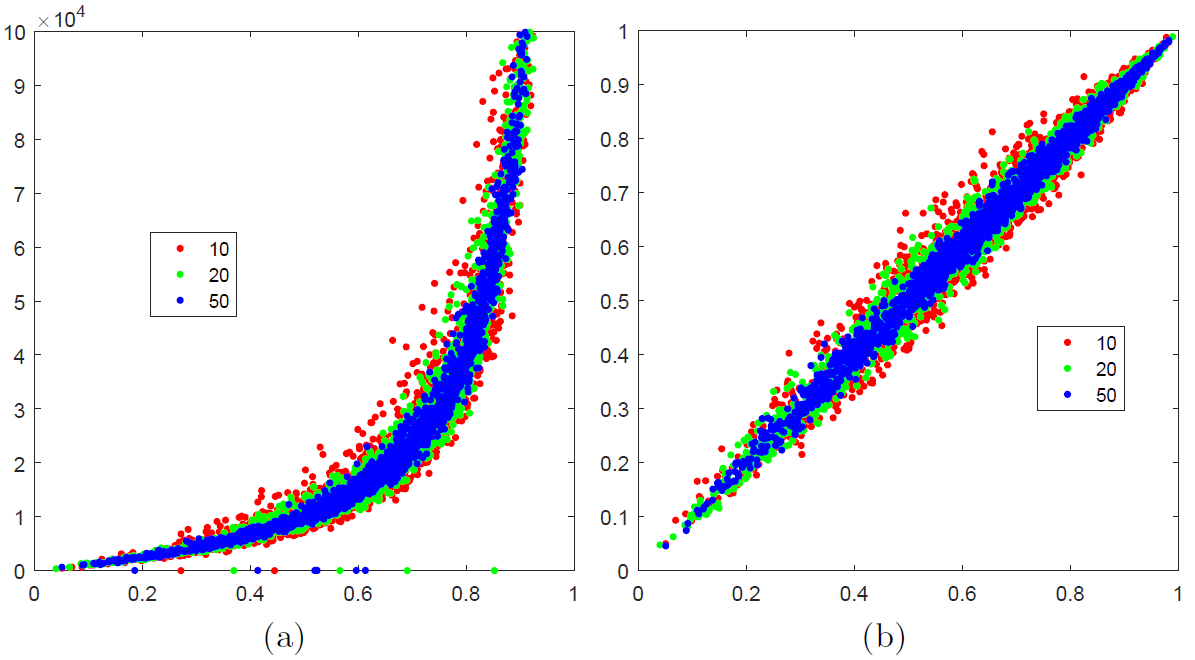

In Optimization, Apr 03, 2017

Training with a large number of classes

In machine learning we often face classification problems with a very large number of classes, which creates a computational bottleneck. There is, though, a simple and eff...

In Classification, Mar 15, 2017

Featured

AI Coworkers

In General

Training Models that have Zero Likelihood

In Optimization

Learning From Scratch by Thinking Fast and Slow with Deep Learning and Tree Search

In Reinforcement Learning

Some modest insights into the error surface of Neural Nets

In Optimization

Evolutionary Optimization as a Variational Method

In Optimization

Training with a large number of classes

In Classification